It’s time for a modern metadata solution, one that is just as fast, flexible, and scalable as the rest of the modern data stack

2020 brought a lot of new words into our everyday vocabulary — think coronavirus, defund, and malarkey. But in the data world, another phrase has been making the rounds… the modern data stack.

The data world has recently converged around the best set of tools for dealing with massive amounts of data, aka the “modern data stack”. This includes setting up data infrastructure on best-of-breed tools like Snowflake for data warehousing, Databricks for data lakes, and Fivetran for data ingestion.

The good? The modern data stack is super fast, easy to scale up in seconds, and requires little overhead. The bad? It’s still a noob in terms of bringing governance, trust and context to data.

That’s where metadata comes in.

So what should modern metadata look like in today’s modern data stack? How can basic data catalogs evolve into a powerful vehicle for data democratization and governance? Why does metadata management need a paradigm shift to keep up with today’s needs?

In the past year, I’ve spoken to over 350 data leaders to understand their fundamental challenges with existing metadata management solutions and construct a vision for modern metadata management. I like to call this approach “Data Catalog 3.0”.

Why does the modern data stack need “modern” metadata management more than ever?

A few years ago, data would primarily be consumed by the IT team in an organization. However, today data teams are more diverse than ever — data engineers, analysts, analytics engineers, data scientists, product managers, business analysts, citizen data scientists, and more. Each of these people have their own favorite and equally diverse data tools, everything from SQL, Looker, and Jupyter to Python, Tableau, dbt, and R.

This diversity is both a strength and struggle. All of these people have different ways of approaching a problem, tools, skill sets, tech stacks, ways of working… essentially, they each have a unique “data DNA”.

The result is often chaos within collaboration. Frustrated questions like “What does this column name actually mean?” and “Why are the sales numbers on the dashboard wrong again?” bring speedy teams to a crawl when they need to use data.

These questions aren’t anything new. After all, Gartner has published its Magic Quadrant for Metadata Management Solutions for over 5 years now.

But there’s still no good solution. Most data catalogs are little more than band-aid solutions from the Hadoop era, rather than keeping in step with the innovation and advances behind today’s modern data stack.

The past and future of metadata management

Just like data, how we think about and work with metadata has steadily evolved over the past three decades. It can be broadly broken down into three stages of evolution: Data Catalog 1.0, Data Catalog 2.0, and Data Catalog 3.0.

Data Catalog 1.0: Metadata management for IT teams

Time: 1990s and 2000s

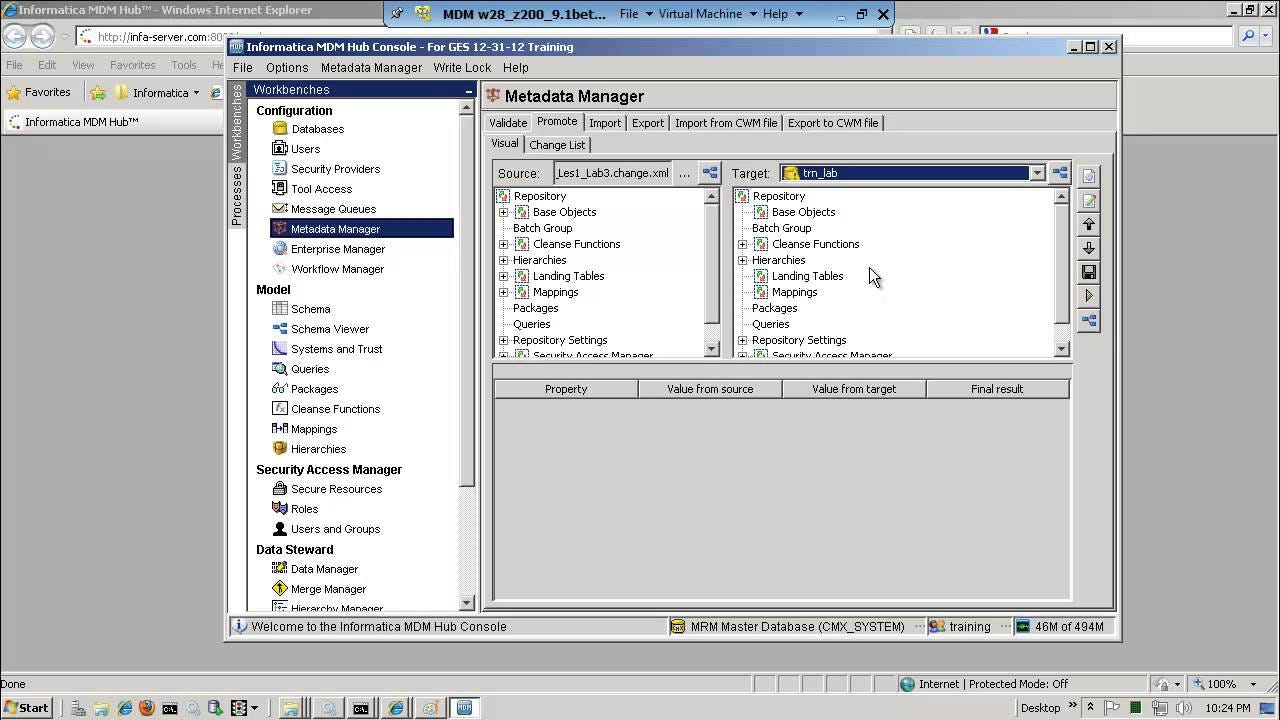

Products: Informatica, Talend

Metadata has technically been around since ancient times — e.g. descriptive tags attached to each scroll in the Library of Alexandria. However, the modern idea of metadata dates back to the late 1900s.

In the 1990s, we thankfully set aside floppy disks and embraced this newfangled tool called the internet. Soon enough, big data and data science were all the rage, and organizations were trying to figure out how to organize their new collections of data.

As data types and formats and, well, data itself exploded, IT teams were put in charge of creating an “inventory of data”. Companies like Informatica took an early lead in metadata management, but setting up and keeping on top of their new data catalogues was a constant struggle for IT folks.

Data warehouse teams often spend an enormous amount of time talking about, worrying about, and feeling guilty about metadata. Since most developers have a natural aversion to the development and orderly filing of documentation, metadata often gets cut from the project plan despite everyone’s acknowledgment that it is important.

Ralph Kimball, 2002

Data Catalog 2.0: Data inventory powered by data stewards

Time: 2010s

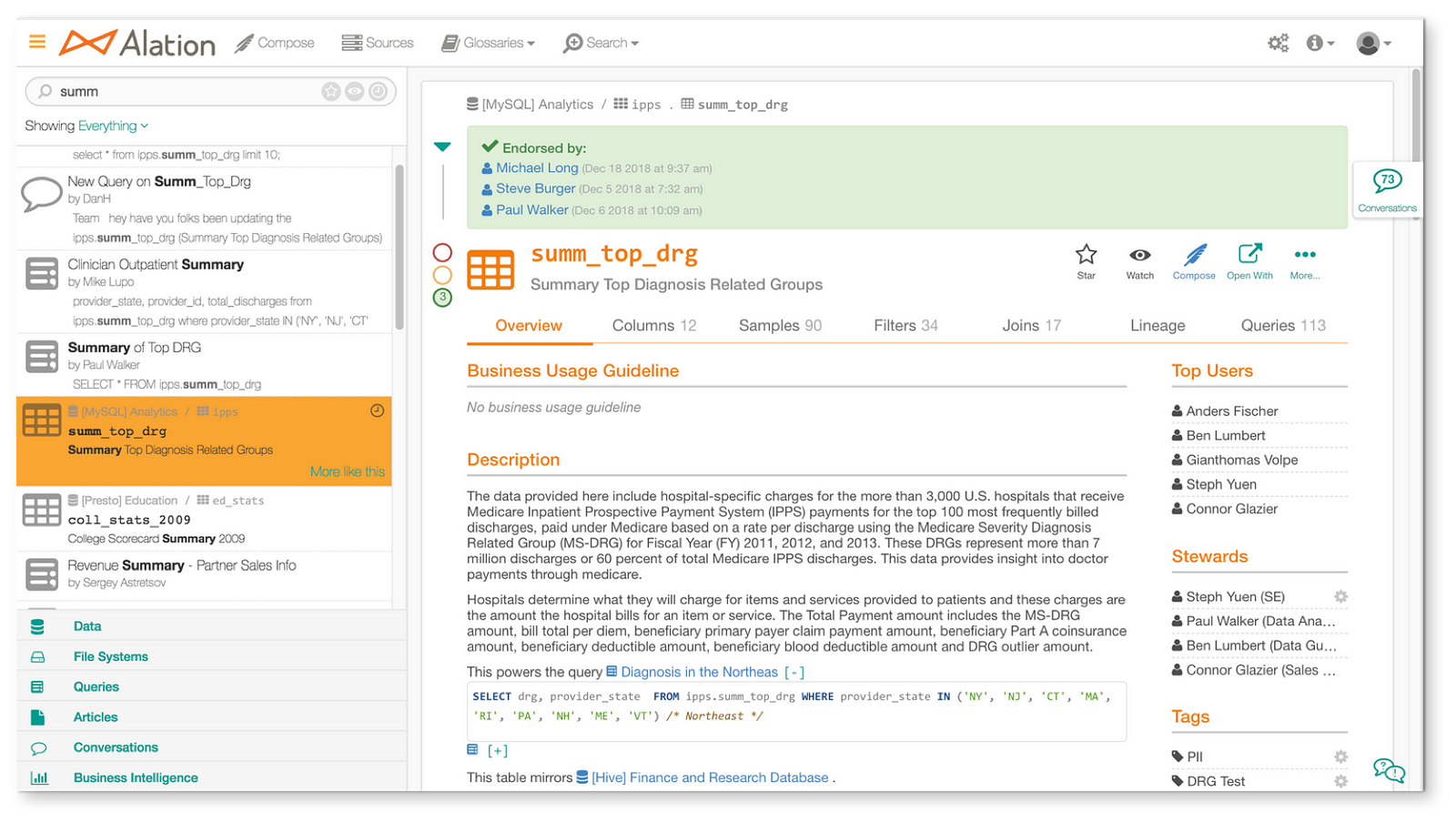

Products: Collibra, Alation

As data became more mainstream and spread beyond the IT team, the idea of Data Stewardship took root. This referred to a dedicated suite of people who were responsible for taking care of an organization’s data. They would handle metadata, maintain governance practices, manually document data, and so on.

Meanwhile, the idea of metadata shifted. As companies started setting up massive Hadoop implementations, they realized that a simple IT inventory of data wasn’t enough anymore. Instead, new data catalogs needed to blend data inventory with new business context.

Just like the uber-complex Hadoop systems of this era, Data Catalog 2.0s were difficult to set up and maintain. They involved rigid data governance committees, formal data stewards, complex technology set-ups, and lengthy implementation cycles. All in all, this process could take up to 18 months.

Tools in this era were built on fundamentally monolith architectures and deployed on-premise. Each data system would have its own installation, and companies couldn’t roll out software changes by pushing a simple cloud update.

Technical debt grew, and metadata management steadily started falling behind the rest of the modern data stack.

The need for a paradigm shift in metadata

While the rest of the data infrastructure stack has evolved in the past few years and tools like Fivetran and Snowflake let users set up a data warehouse in less than 30 minutes, data catalogs couldn’t keep up. Even trying out metadata tools from the Data Catalog 2.0 era involves significant engineering time for setup, not to mention at least 5 calls with a sales rep to get a demo.

Due to the lack of viable alternatives, the earliest adopters of the modern data stack and most large tech companies resorted to building their own in-house solutions. Some notable examples include Airbnb’s Dataportal, Facebook’s Nemo, LinkedIn’s DataHub, Lyft’s Amundsen, Netflix’s Metacat, and Uber’s Databook.

However, not all companies have the engineering resources to do this, and it’s not particularly efficient to build dozens of similar metadata tools.

It’s time for a modern metadata solution, one that is just as fast, flexible, and scalable as the rest of the modern data stack.

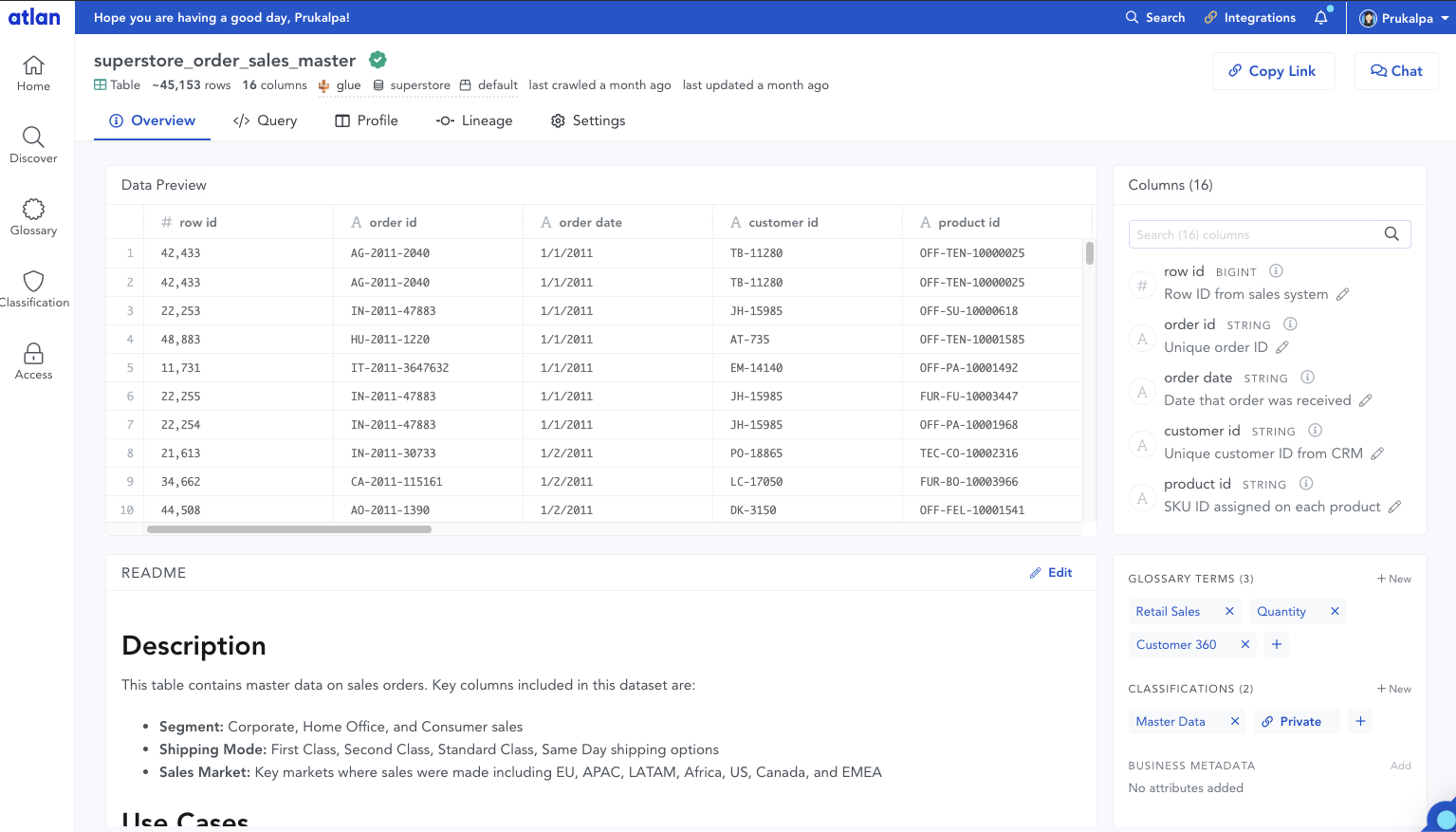

Data Catalog 3.0: Collaborative workspaces for diverse data users

Today we’re at an inflection point in metadata management — a shift from the slow, on-premise Data Catalog 2.0 to the start of a new era, Data Catalog 3.0. Just like the jump from 1.0 to 2.0, this will be a fundamental shift in how we think about metadata.

Data Catalog 3.0s will not look and feel like their predecessors in the Data Catalog 2.0 generation. Instead, Data Catalog 3.0s will be built on the premise of embedded collaboration that is key in today’s modern workplace, borrowing principles from Github, Figma, Slack, Notion, Superhuman, and other modern tools that are commonplace today.

The 4 characteristics of Data Catalog 3.0

1. Data assets > tables

The Data Catalog 2.0 generation was built on the premise that “tables” were the only asset that needed to be managed. But that’s completely different now.

Nowadays, BI dashboards, code snippets, SQL queries, models, features and Jupyter notebooks are all data assets.

The 3.0 generation of metadata management will need to be flexible enough to intelligently store and link all these different types of data assets in one place.

2. End-to-end data visibility, rather than piecemeal solutions

Tools from the Data Catalog 2.0 era made significant strides in improving data discovery. However, they didn’t give organizations a “single source of truth” for their data. Information about data assets is usually spread across different places — data lineage tools, data quality tools, data prep tools, and more.

The Data Catalog 3.0 will help teams finally achieve the holy grail, a single source of truth about every data asset in the organization.

3. Built for world where metadata itself is “big data”

We’re fast approaching a world where metadata itself will be big data. Being able to process and understand metadata will help teams understand and trust their data better.

That’s why the new Data Catalog 3.0 should be more than just a metadata storage.

It should fundamentally leverage metadata as a form of data that can be searched, analyzed and maintained in the same way as all other types of data.

Today the fundamental elasticity of the cloud makes this possible like never before. For example, query logs are just one kind of metadata available today. By parsing through the SQL code from query logs in Snowflake, it’s possible to automatically create column-level lineage, assign a popularity score to every data asset, and even deduce the potential owners and experts for each asset.

4. Embedded collaboration comes of age

Airbnb said something profound when sharing their learnings about driving adoption of their internal data portal: “Designing the interface and user experience of a data tool should not be an afterthought.”

Because of the fundamental diversity in data teams, data tools need to be designed to integrate seamlessly with teams’ daily workflow.

This is where the idea of embedded collaboration really comes alive. Embedded collaboration is about work happening where you are, with the least amount of friction.

What if you could request access to a data asset when you get a link, just like with Google Docs, and the owner could get the request on Slack and approve or reject it right there? Or what if, when you’re inspecting a data asset and need to report an issue, you could immediately trigger a support request that’s perfectly integrated with your engineering team’s JIRA workflow?

Embedded collaboration can unify dozens of these micro-workflows that waste time, cause frustration, and lead to tool fatigue for data teams, and instead make these tasks delightful!

What’s next?

Anyone who works with data knows that it’s long past time for data catalogs to catch up with the rest of the modern data stack. After all, data is pretty meaningless without the assets that make it understandable — documentation, queries, history, glossaries, etc.

As metadata itself becomes big data, we’re on the cusp of a transformative leap in metadata management. While we don’t know everything about the Data Catalog 3.0 era yet, it’s clear that in the next few years there will be the rise of a modern metadata management product that takes its rightful place in the modern data stack.

This article was originally published in Towards Data Science.